Abstract

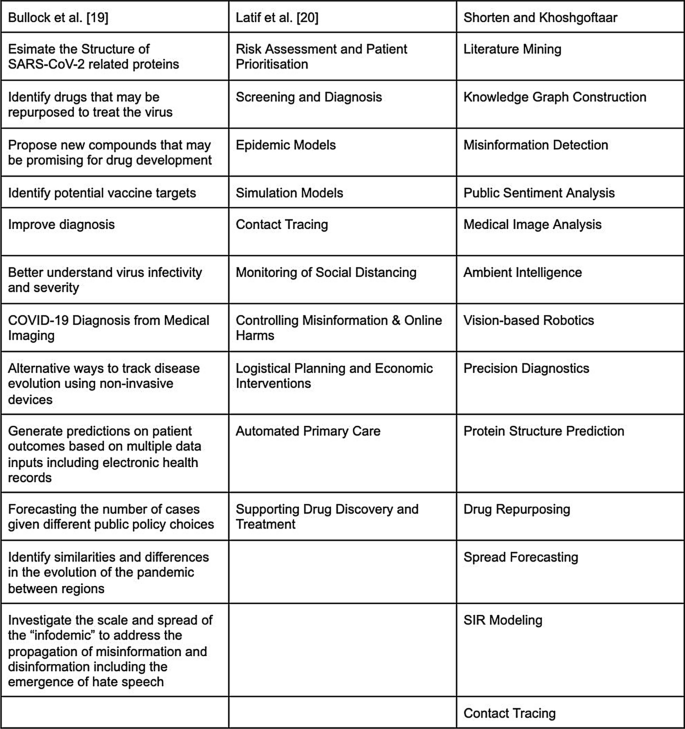

This survey explores how Deep Learning has battled the COVID-19 pandemic and provides directions for future research on COVID-19. We cover Deep Learning applications in Natural Language Processing, Computer Vision, Life Sciences, and Epidemiology. We describe how each of these applications varies with the availability of big data and how learning tasks are constructed. We begin by evaluating the current state of Deep Learning and conclude with key limitations of Deep Learning for COVID-19 applications. These limitations include Interpretability, Generalization Metrics, Learning from Limited Labeled Data, and Data Privacy. Natural Language Processing applications include mining COVID-19 research for Information Retrieval and Question Answering, as well as Misinformation Detection and Public Sentiment Analysis. Computer Vision applications cover Medical Image Analysis, Ambient Intelligence, and Vision-based Robotics. Within Life Sciences, our survey looks at how Deep Learning can be applied to Precision Diagnostics, Protein Structure Prediction, and Drug Repurposing. Deep Learning has additionally been utilized in Spread Forecasting for Epidemiology. Our literature review has found many examples of Deep Learning systems to fight COVID-19. We hope that this survey will help accelerate the use of Deep Learning for COVID-19 research.


Introduction

SARS-CoV-2 and the resulting COVID-19 disease are among the biggest challenges of the 21st century. At the time of this publication, about 43 million people have tested positive and 1.2 million people have died as a result [1]. Fighting this virus requires the heroism of healthcare workers, social organization, and technological solutions. This survey focuses on advancing technological solutions, with an emphasis on Deep Learning. We additionally highlight many cases where Deep Learning can facilitate social organization, such as Spread Forecasting, Misinformation Detection, or Public Sentiment Analysis. Deep Learning has gained massive attention by defeating the world champion at Go [2], controlling a robotic hand to solve a Rubik’s cube [3], and completing fill-in-the-blank text prompts [4]. Deep Learning is advancing very quickly, but what is the current state of this technology? What problems does Deep Learning have the capability of solving? How do we articulate COVID-19 problems for the application of Deep Learning? We explore these questions through the lens of Deep Learning applications fighting COVID-19.

This survey aims to illustrate the use of Deep Learning in COVID-19 research. Our contributions are as follows:

- This is the first survey viewing COVID-19 applications solely through the lens of Deep Learning. In comparison with other surveys on COVID-19 applications in Data Science or Machine Learning, we provide extensive background on Deep Learning.

- For each application area surveyed, we provide a detailed analysis of how the given data is inputted to a deep neural network and how learning tasks are constructed.

- We provide an exhaustive list of applications in data domains such as Natural Language Processing, Computer Vision, Life Sciences, and Epidemiology. We particularly focus on work in Literature Mining for COVID-19 research papers, compiling papers from the ACL 2020 NLP-COVID workshop.

- Finally, we review common limitations of Deep Learning including Interpretability, Generalization Metrics, Learning from Limited Labeled Data, and Data Privacy. We describe how these limitations impact each of the surveyed COVID-19 applications. We additionally highlight research tackling these issues.

Our survey is organized into four primary sections. We start with a “Background” on Deep Learning to explain the relationship with other Artificial Intelligence technologies such as Machine Learning or Expert Systems. This background also provides a quick overview of SARS-CoV-2 and COVID-19. The next section lists and explains “Deep Learning applications for COVID-19”. We organize surveyed applications by input data type, such as text or images. This is different from other surveys on COVID-19 that organize applications by scales such as molecular, clinical, and society-level [5, 6].

From a Deep Learning perspective, organizing applications by input data type will help readers understand common frameworks for research. Firstly, this avoids repeatedly describing how language or images are inputted to a Deep Neural Network. Secondly, applications working with the same type of input data have many similarities. For example, cutting-edge approaches to Biomedical Literature Mining and Misinformation Detection both work with text data. They have many commonalities such as the use of Transformer neural network models and reliance on a self-supervised representation learning scheme known as language modeling. We thus divide surveyed COVID-19 applications into “Natural Language Processing”, “Computer Vision”, “Life Sciences”, and “Epidemiology”. However, our coverage of applications in Life Sciences diverges from this structure. In the scope of Life Sciences, we describe a range of input data types, such as tabular Electronic Health Records (EHR), textual clinical notes, microscopic images, categorical amino acid sequences, and graph-structured network medicine.

The datasets used across these applications tend to share the common limitation of size. In a rapid pandemic response situation, it is especially challenging to construct large datasets for Medical Image Analysis or Spread Forecasting. This problem is evident in Literature Mining applications such as Question Answering or Misinformation Detection as well. Literature Mining data is an interesting situation for Deep Learning because we have an enormous volume of published papers. Despite having such a large unlabeled dataset, downstream applications such as question answering or fact verification datasets are extremely small in comparison. We will continually discuss the importance of pre-training for Deep Learning. This paradigm relies on either supervised or self-supervised transfer learning. Of core importance, explored throughout this paper, is the presence of in-domain data. Even if it is unlabeled, such as a biomedical literature corpus, or slightly out-of-domain, such as the CheXpert radiograph dataset for Medical Image Analysis [7], availability of this kind of data is paramount for achieving high performance.

When detailing each application of Deep Learning to COVID-19, we place an emphasis on the representation of data and the task. Task descriptions mostly describe how a COVID-19 application is constructed as a learning problem. We are solely focused on Deep Learning applications, and thus we are referring to representation learning from raw or high-dimensional data. A definition and overview of representation learning is provided in the “Background” section. The following list quickly describes different learning variants found in our surveyed applications:

- Supervised Learning optimizes a loss function with respect to predicted and ground truth labels. These ground truth labels require manual annotation.

- Unsupervised Learning does not use labels. This includes clustering algorithms that look for intrinsic structure in data.

- Self-Supervised Learning optimizes a loss function with respect to the predicted and ground truth labels. Differently from Supervised Learning, these labels are constructed from a separate computing process, rather than human annotation.

- Semi-Supervised Learning uses a mix of human labeled and unlabeled data for representation learning.

- Transfer Learning describes initializing training with the representation learned from a previous task. This previous task is most commonly ImageNet-based supervised learning in “Computer Vision” or Internet-scale language modeling in “Natural Language Processing”.

- Multi-Task Learning simultaneously optimizes multiple loss functions, usually either interleaving updates or applying regularization penalties to avoid conflicting gradients from each loss.

- Weakly Supervised Learning refers to supervised learning with heuristically labeled data, rather than carefully labeled data.

- Multi-Modal Learning describes representation learning in multiple data types simultaneously, such as images and text or images and electronic health records.

- Reinforcement Learning optimizes a loss function with respect to a series of state to action predictions. This is especially challenging due to credit assignment in the sequence of state to action mappings when receiving sparse rewards.

It is important to note the distinction between these learning task constructions in each of our surveyed applications. We further contextualize our surveyed applications with an overview of “Limitations of Deep Learning”. These limitations are non-trivial and present significant barriers for Deep Learning to fight COVID-19 related problems. Solutions to these issues of “Interpretability”, “Generalization metrics”, “Learning from limited labeled datasets”, and “Data privacy” will be important to many applications of Deep Learning. We hope describing how the surveyed COVID-19 applications are limited by these issues will develop intuition about the problems and motivate solutions. Finally, we conclude with a “Discussion” and “Conclusion” from our literature review. Our Discussion describes lessons learned from a comprehensive literature review and plans for future research.

Deep Learning for “Natural Language Processing” (NLP) has been extremely successful. Applications for COVID-19 include Literature Mining, Misinformation Detection, and Public Sentiment Analysis. Searching through the biomedical literature has been extremely important for drug repurposing. A success case of this is the repurposing of baricitinib [8], an anti-inflammatory drug used in rheumatoid arthritis. The potential efficacy of this drug was discovered by querying biomedical knowledge graphs. Modern knowledge graphs utilize Deep Learning for automated construction. Other biomedical literature search systems use Deep Learning for information retrieval from natural language queries. These Literature Mining systems have been extended with question answering and summarization models that may revolutionize search altogether. We additionally explore how NLP can fight the “infodemic” by detecting false claims and presenting evidence. NLP is also useful to evaluate public sentiment about the pandemic from data such as tweets and provide tools for social scientists to analyze free-text response surveys.

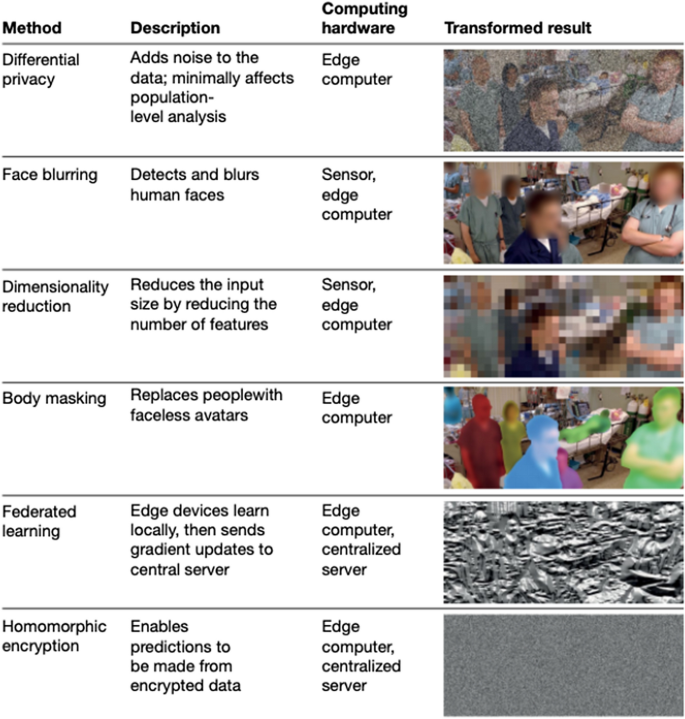

“Computer Vision” is another mature application domain of Deep Learning. The Transformer revolution in Natural Language Processing largely owes its success to Computer Vision’s pioneering use of large datasets, massive models, and hardware that accelerates parallel computation, namely GPUs [9]. Computer Vision applications to COVID-19 include Medical Image Analysis, Ambient Intelligence, and Vision-based Robotics. Medical Image Analysis has been used to supplement RT-PCR testing for diagnosis by classifying COVID-induced pneumonia from chest X-rays and CT scans. Haque et al. [10] recently published a survey on Computer Vision applications for physical space monitoring in hospitals and daily living spaces. They termed these applications “Ambient Intelligence”. This is an interesting phrase to encompass a massive set of more subtle applications such as automated physical therapy assistance, hand washing detection, or surgery training and performance evaluation. This section is particularly suited to our discussion on Data Privacy in “Limitations of Deep Learning”. We also look at how Vision-Based Robotics can ease the economic burden of COVID-19, as well as automate disinfection.

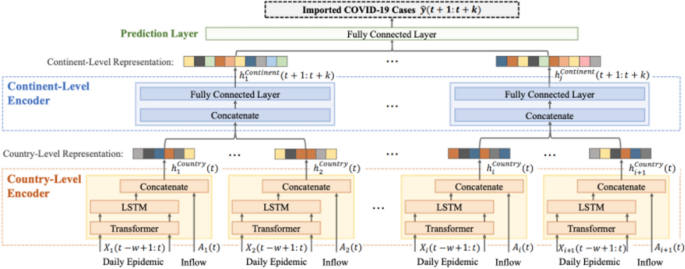

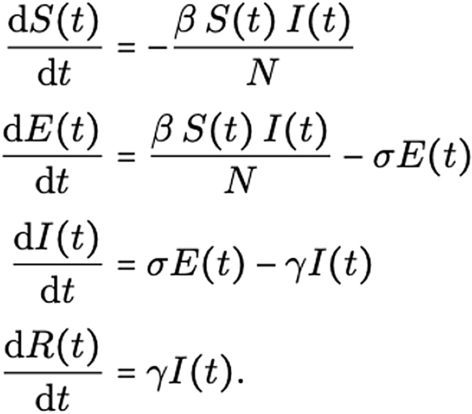

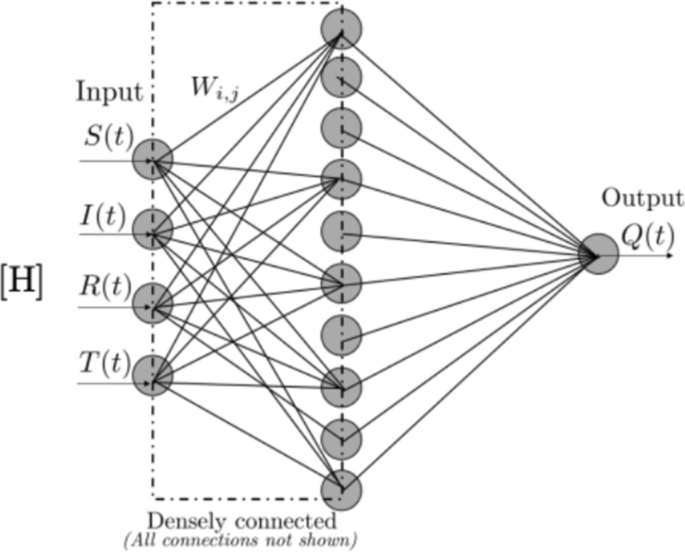

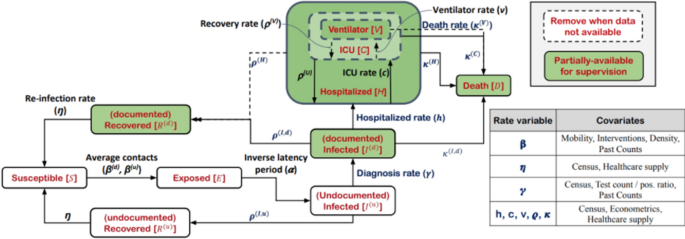

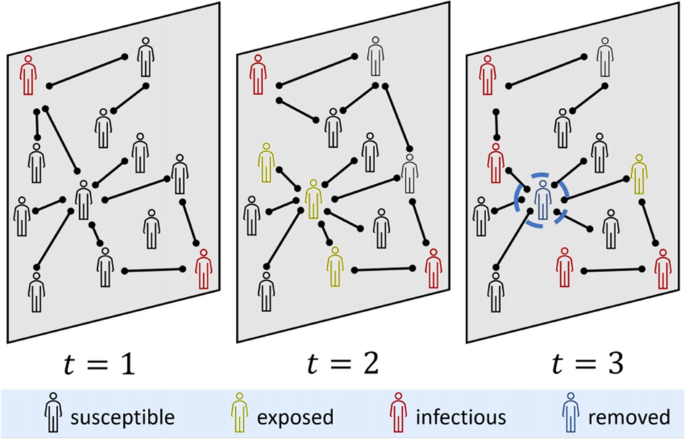

Deep Learning can improve virus spread models used in “Epidemiology”. Our coverage of these models starts with “black-box” forecasting. These models use a history of infections, as well as information such as lockdown phase, to predict future cases or deaths. We describe how this varies based on region specificity. We will then look at adding more structure to the population model. The best-known examples of this are Susceptible, Infected, and Recovered (SIR) models. The illustrative SIR model describes how a population transitions from healthy or “Susceptible”, to “Infected”, and “Recovered” through a set of three differential equations. Fitting these equations to data on the initial and recovered populations yields the infection and recovery rates. The challenge with these SIR models is that they rely on limiting assumptions. We will explore how Deep Neural Networks have been used to solve differential equations and integrate the non-linear impact of quarantine or travel into these SIR models. For even finer-grained predictions, we look into the use of Contact Tracing, potentially enabling personalized risk of infection analysis.
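For reference, the three differential equations of the SIR model are commonly written in the textbook form below. The symbols $\beta$ (infection rate), $\gamma$ (recovery rate), and $N$ (total population) are notation introduced here for illustration and are not taken from any particular surveyed work.

```latex
\begin{aligned}
\frac{dS}{dt} &= -\beta \frac{S I}{N}, \\
\frac{dI}{dt} &= \beta \frac{S I}{N} - \gamma I, \\
\frac{dR}{dt} &= \gamma I,
\end{aligned}
\qquad S + I + R = N.
```

The three right-hand sides sum to zero, so the total population $N$ is conserved, which is one of the limiting assumptions of the basic model.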

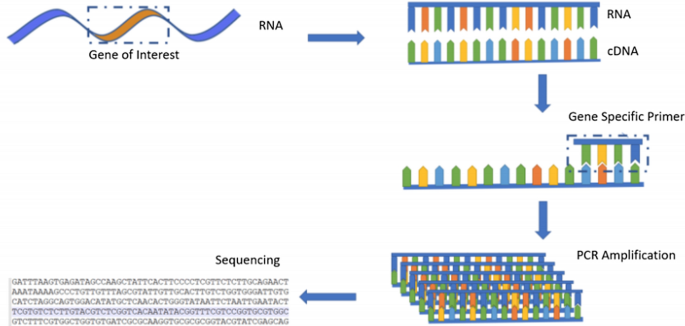

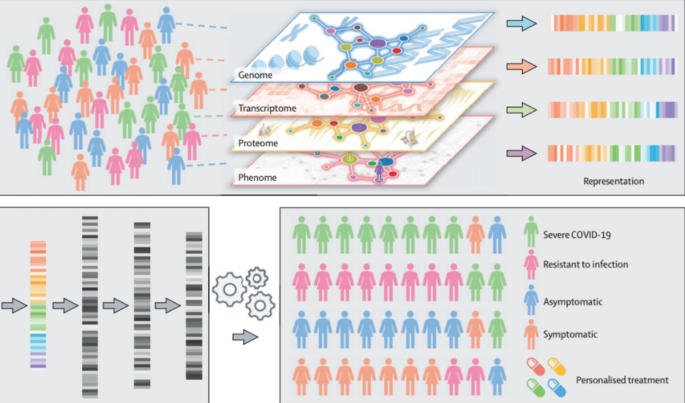

The application of Deep Learning for “Life Sciences” is incredibly exciting, but still in its early stages. RT-PCR has become the gold standard for COVID-19 testing. This viral nucleic acid test utilizes primers and transcription enzymes to amplify a chunk of DNA such that fluorescent probes can signal the presence of the viral RNA. However, these tests have a high false negative rate. We will look at studies that sequence this RNA and deploy deep classification models, use Computer Vision to process expression, as well as studies that design assays to cover a wide range of genomes. New diagnostic tools are being developed with detailed biological and historical information about each patient. This is known as Precision Medicine. Precision Medicine in COVID-19 applications looks at predicting patient outcome based on patient history recorded in Electronic Health Records (EHR), as well as miscellaneous biomarkers such as blood testing results. This is another section that is highly relevant for our cautionary “Limitations of Deep Learning” with respect to Data Privacy.

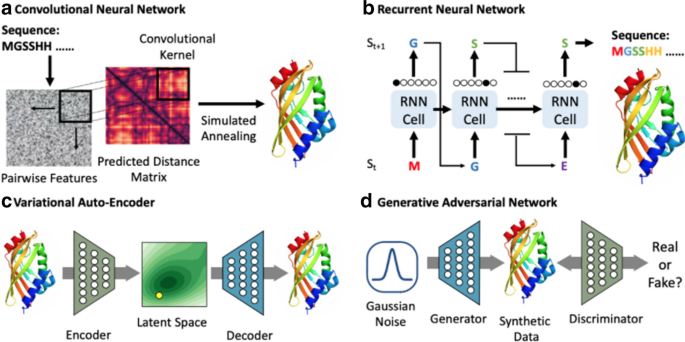

Another exciting application area is the intersection of Deep Learning and molecular engineering. Deep Learning has received massive press for the development of AlphaFold. Given the 1-dimensional string of amino acids, AlphaFold predicts the resulting 3-D structure. These models have been used to predict the 3-D structure of the spike proteins on the outer shell of the coronavirus, as well as its other proteins. Having a model of this structure allows biochemists to see potential binding targets for drug development. These bindings can prevent the virus from entering human cells through membrane proteins such as ACE2. We can use Deep Learning to suggest potential binding drug candidates. New drugs must undergo a lengthy and costly clinical trial process. For this reason, COVID-19 research has been much more focused on drug repurposing to find treatments.

Within the scope of Natural Language Processing, we present the automated construction of biomedical knowledge graphs from a massive and rapidly growing body of literature. These graphs can be used to discover potential treatments from already approved drugs, an application known as drug repurposing. Drug repurposing is highly desirable because the safety profile of these drugs has been verified through a rigorous clinical trial process. Biomedical experts can search through these knowledge graphs to find candidate drugs. However, another interesting way to search through these graphs is to set up the problem as link prediction. Given a massive graph of nodes such as proteins, diseases, genes and edges such as “A inhibits B”, we can use graph representation learning techniques such as graph neural networks to predict relations between nodes in the graph.

Our final application area surveyed is the use of Deep Learning for “Epidemiology”. How many people do we expect to be infected with COVID-19? How long do we have to quarantine for? These are query examples for our search systems, described as NLP applications, but epidemiological models are the source of these answers. We will begin exploring this through the lens of “black-box” forecasting models that look at the history of infections and other information to predict into the future. We will then look at SIR models, a set of differential equations modeling the transition from Susceptible to Infected to Recovered. These models find the reproductive rate of the virus, which can characterize the danger of letting herd immunity develop naturally. To formulate this as a Deep Learning task, a Deep Neural Network approximates the time-varying strength of quarantine in the SIR model, since integrating the Exposed population would require extremely detailed data. Parameter optimizers from Deep Learning such as Adam [11] can be used to solve differential equations as well. We briefly investigate the potential of Contact Tracing data. Tracking the movement of individuals when they leave their quarantine could produce incredibly detailed datasets about how the virus spreads. We will explore what this data might look like and some tasks for Deep Learning. This is another application area that relates heavily to our discussion of data privacy in “Limitations of Deep Learning”.
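As a rough illustration of the SIR dynamics and the time-varying quarantine term described above, the following sketch integrates the model with a simple forward-Euler scheme. The function name `simulate_sir`, the parameter values, and the hand-written `quarantine` function are all assumptions made for this example; in the surveyed work the quarantine strength is approximated by a Deep Neural Network rather than a fixed rule.

```python
def simulate_sir(beta, gamma, s0, i0, r0, days, dt=0.1, quarantine=None):
    """Forward-Euler integration of the SIR equations.

    `quarantine` is an optional function t -> q(t) >= 0. Infected people are
    moved to the removed compartment at the extra rate q(t) * I. Here it is a
    hand-written placeholder standing in for a learned neural network.
    """
    n = s0 + i0 + r0
    s, i, r = float(s0), float(i0), float(r0)
    history = [(0.0, s, i, r)]
    steps = int(round(days / dt))
    for k in range(steps):
        t = k * dt
        q = quarantine(t) if quarantine is not None else 0.0
        new_infections = beta * s * i / n   # flow from S to I
        removals = (gamma + q) * i          # flow from I to R
        s += dt * (-new_infections)
        i += dt * (new_infections - removals)
        r += dt * removals
        history.append((t + dt, s, i, r))
    return history


# Hypothetical parameters: beta = 0.3/day, gamma = 0.1/day, 1000 people.
baseline = simulate_sir(0.3, 0.1, 990, 10, 0, days=160)
# Hypothetical quarantine rule that switches on after day 5.
with_quarantine = simulate_sir(
    0.3, 0.1, 990, 10, 0, days=160,
    quarantine=lambda t: 0.05 if t > 5 else 0.0,
)
peak_baseline = max(i for _, _, i, _ in baseline)
peak_quarantine = max(i for _, _, i, _ in with_quarantine)
```

Comparing `peak_baseline` with `peak_quarantine` shows how an additional removal rate lowers the peak of the infected curve under these assumed parameters.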

These applications of Deep Learning to fight COVID-19 are promising, but it is important to be cognizant of the drawbacks to Deep Learning. We focus on the issues of “Interpretability”, “Generalization metrics”, “Learning from limited labeled datasets”, and “Data Privacy”. It is very hard to interpret the output of current Deep Learning models. This problem is further compounded by the lack of a reliable measure of uncertainty. When the model starts to see out-of-distribution examples, data points sampled from a different distribution than the data used to train the model, most models will continue to confidently misclassify these examples. It is very hard to characterize how well a trained model will generalize to new data distributions. Furthermore, these models can fail at simple commonsense tasks, even after achieving high performance on the training dataset. Achieving this high performance in the first place comes at the cost of massive, labeled datasets. This is impractical for most clinical applications like Medical Image Analysis, as well as for quickly building question-answering datasets. Finally, we have to consider data privacy with these applications. Will patients feel comfortable allowing their ICU activity to be monitored by an intelligent camera? Would patients be comfortable with their biological data and medical images being stored in a central database for training Deep Learning models? This introduction should moderate enthusiasm about Deep Learning as a panacea to all problems. However, we take an optimistic look at these problems in our section “Limitations of Deep Learning”, explaining solutions to these problems as well, such as self-explanatory models or federated learning.

Background

This section will provide a background for this survey. We begin with a quick introduction to COVID-19, followed by what Deep Learning is and how it relates to other Artificial Intelligence technologies. Finally, we present the relationship of this survey with other works reviewing the use of Artificial Intelligence, Data Science, or Machine Learning to fight COVID-19.

SARS-CoV-2 originated from Wuhan, China and spread across the world, causing a global pandemic. The response has been a mixed bag of mostly chaos and a little optimism. Scientists were quick to sequence and publish the complete genome of the virus [12], and individuals across the world quarantined themselves to contain the spread. Scientists have lowered barriers for collaboration. However, there have been many negative issues surrounding the pandemic. The quick infection and lack of resources have overloaded hospitals and heavily burdened healthcare workers. SARS-CoV-2 has a unique characteristic of peak infection before symptom manifestation that has worked in the favor of the virus. Misinformation has spread so rampantly that a new field of “infodemiology” has sprouted to fight the “infodemic”. Confusion about correct information is compounded by a rapidly growing body of literature surrounding SARS-CoV-2 and COVID-19. This research is emerging very quickly and new tools are needed to help scientists organize this information.

A technical definition of Deep Learning is the use of neural networks with more than one or two layers. A neural network “layer” is typically composed of a parametric, non-linear transformation of an input. Stacking these transformations forms a statistical data structure capable of mapping high-dimensional inputs to outputs. This mapping is executed by optimizing the parameters. Gradient descent is the tool of choice for this optimization. Gradient descent works by taking the partial derivative of the loss function with respect to each of the parameters, and updating the parameters to minimize the loss function. Deep Learning gets the name “Deep” in reference to stacking several of these layers. The second part of the name, “Learning”, references parameter optimization. State-of-the-art Deep Learning models typically contain between 100 million and 10 billion parameters and 50–200 layers. Two of the largest models publicly reported are composed of 600 billion and 175 billion parameters [4, 13]. Scaling up the size of these models has accelerated extremely quickly in the past few years [14].
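The gradient descent update described above can be sketched on a deliberately tiny problem. The example below fits a single linear unit rather than a deep network, and the function name `gradient_descent`, the learning rate, and the toy data are assumptions chosen for illustration only.

```python
def gradient_descent(xs, ys, lr=0.05, steps=2000):
    """Fit y = w * x + b by minimizing the mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Partial derivatives of the loss with respect to each parameter.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # Step against the gradient to reduce the loss.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]  # toy data generated by y = 2x + 1
w, b = gradient_descent(xs, ys)
```

A deep network follows the same loop, except that the partial derivatives are computed through many stacked non-linear layers by backpropagation instead of being written out by hand.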

In application to data such as text, images, or molecular sequences, Deep Learning is a massive step up from Machine Learning. This is because Deep Learning learns features automatically, whereas in Machine Learning features are manually constructed. Machine Learning also describes fitting parametric models to map from input to output, but it processes inputs represented by human-crafted features. A crafted feature to classify an animal as a dog or a zebra could be the weight of the animal, or the possession of stripes.

For high-dimensional data such as image pixel tensors or text embedding matrices, it is very hard to manually design high performing features for Machine Learning. Machine Learning is much different from Deep Learning, in which the features are learned automatically from this high-dimensional “raw” data. The features learned in Deep Learning are referred to as representations. A representation is typically analyzed through the penultimate vector that is inputted to the output prediction. It is very challenging to interpret this representation because of the non-linear interactions between variables that lead to it. For example, we cannot say the 3rd and 8th position of the representation vector solely look for the possession of stripes. In addition to looking at the penultimate layer vector output, the representation is also examined through the first embedding vector for word tokens. In either case, these high-dimensional vectors are commonly visualized through dimensionality reduction techniques such as t-SNE [15] or UMAP [16].

The entire set of intermediate neural network outputs can equally be considered as the representation of the data. It is very important to view representations in this way for the sake of transfer learning. This is where a neural network is trained on one task, usually one with a much larger set of labeled data, and then sequentially trained on another task. The difference between human-designed features and representation learned from raw data is the core distinction between Deep and Machine Learning.

Deep Learning is a piece in the bigger picture of Artificial Intelligence (AI). In addition to the distinction between Deep and Machine Learning, the scope of AI also includes Symbolic Systems. Symbolic Systems produce intelligent behavior through symbol manipulation and logic. Examples include Expert Systems and Knowledge Graphs. Expert Systems use if-else rules to make decisions. Knowledge Graphs store relations between objects in graph data structures. The application of Knowledge Graphs is extremely useful for fighting COVID-19. An example of this is BenevolentAI’s Knowledge Graph [17]. This is done by searching through explicitly coded relations between proteins, drugs, and clinical trial observations, to name a few. Biomedical researchers use a structured query language, rather than natural language, to search through these graphs.

Deep Learning does not process information in the same way as Symbolic Systems. Rather than topological compositions of atomic units, Deep Learning stores information in distributed tensors. There is an interplay with Deep Learning in symbolic systems like Knowledge Graphs. Deep Learning is used to automate the construction of Knowledge Graphs through Named Entity Recognition and Relation Extraction tasks. Manually performing these tasks on big datasets such as a corpus of biomedical literature would be impossible. This automated Knowledge Graph construction is discussed heavily in our survey in application to drug repurposing.

We recommend readers explore Chollet’s Measure of Intelligence [18] for a definition of intelligence more generally. Intelligence is defined as a function of prior knowledge, experience, and generalization difficulty. This is a useful framework for thinking about the intelligence required with surveyed COVID-19 applications. What makes one application require more intelligence than another? How can we add more prior knowledge to these systems? How might this prior knowledge limit generalization ability? It is argued that we can trade off more prior knowledge for less experience, or vice-versa, we can start with less prior knowledge and make up for that with more experience. The success of these components is determined by the generalization difficulty of the task. Different kinds of prior knowledge injected into an artificial intelligence may limit generalization ability, as will different subsets of experience. The efforts of Deep Learning research can be thought of as discovering mechanisms of prior knowledge, collecting experience, and measuring generalization difficulty.

The current generation of Deep Learning is defined in our survey as sequential processing networks with many layers, updating their parameters with a global loss function, and forming distributed representations of data. We have seen an evolution from Machine Learning in representation learning. We also seek to integrate Symbolic Systems, such as the use of Knowledge Graphs. We think it is useful for readers to think of the interplay between prior knowledge, experience, and generalization difficulty to frame the difficulty of our surveyed applications.

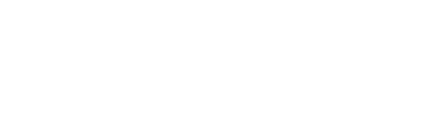

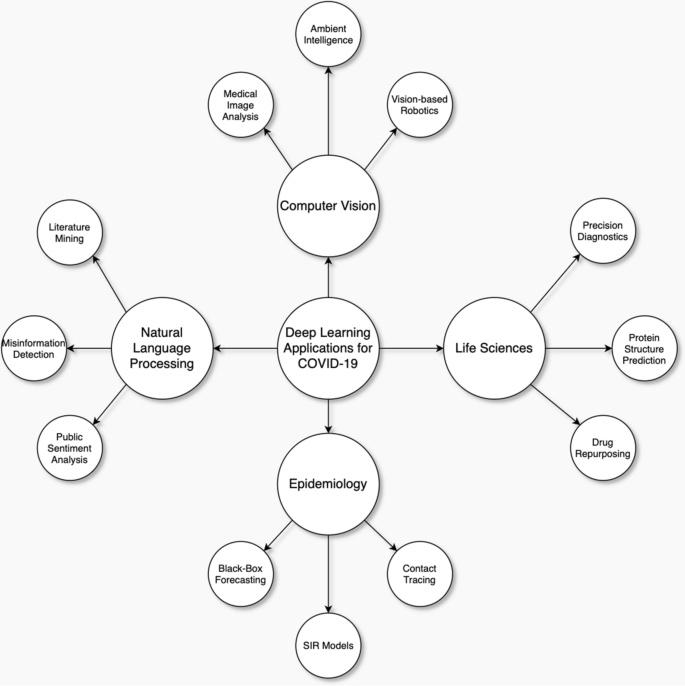

Many other researchers have surveyed the use of Artificial Intelligence to fight COVID-19. Our survey builds on these reports, with a more detailed dive into Deep Learning. Surveys covering AI, Data Science, or Machine Learning applied to COVID-19 vary mostly in how they organize COVID-19 applications. For example, Bullock et al. [5] organize their survey into molecular, clinical, and societal perspectives. Figure 1 illustrates how we have deviated from other surveys in presenting COVID-19 applications. Most notably, we do not cover the use of Deep Learning for audio data or the Internet of Things (IoT). Furthermore, applications we do not cover include the diagnostic potential of audio data from breathing recordings [6], and IoT applications such as smartphone temperature and inertial sensors [19]. Contrary to other surveys, we integrate publicly available datasets into our applications, rather than separate the two topics.

Bullock et al. [5] describe the aim of their survey as “not to evaluate the impact of the described techniques, nor to recommend their use, but to show the reader the extent of existing applications and to provide an initial picture and road map of how Artificial Intelligence could help the global response to the COVID-19 pandemic”. We have a similar aim in our survey, focusing solely on Deep Learning. Our survey draws heavy inspiration from Raghu and Schmidt’s paper, “A Survey of Deep Learning for Scientific Discovery” [20]. They cover different Deep Learning models, variants to the supervised learning training process, and limitations of Deep Learning, most notably reliance on large, labeled datasets. Our survey aims to provide a similar overview of Deep Learning and how it can be adapted to different kinds of scientific problems, focused on COVID-19.

Deep Learning applications for COVID-19

Natural Language Processing

We begin our coverage of Natural Language Processing (NLP) by describing how text data is inputted to Deep Neural Networks. In order to feed language as an input to a Deep Neural Network, words are first tokenized into smaller components and mapped to an index in an embedding table. We take a token such as “cat” and map it into a d-dimensional embedding vector, where d is described as the hidden dimension of the Deep Neural Network. For further illustration, the token “the” might be mapped to position “810” in an index table the size of the entire vocabulary. Each of these positions holds a d-dimensional embedding vector representing a unique token. This input representation has been very successful with language tokens. The same strategy can be used for any categorical variable input, such as amino acid tokens.
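The token-to-vector lookup described above can be sketched as follows. This is a minimal illustration that assumes whitespace tokenization and a randomly initialized table; the helper names `build_vocab`, `make_embedding_table`, and `embed` are hypothetical, and practical systems typically use learned subword tokenizers and train the table jointly with the network.

```python
import random


def build_vocab(tokens):
    """Assign each distinct token an integer index in order of appearance."""
    vocab = {}
    for tok in tokens:
        if tok not in vocab:
            vocab[tok] = len(vocab)
    return vocab


def make_embedding_table(vocab_size, d, seed=0):
    """Create a vocab_size x d table of small random values."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 0.02) for _ in range(d)] for _ in range(vocab_size)]


def embed(tokens, vocab, table):
    """Look up the d-dimensional vector stored at each token's index."""
    return [table[vocab[tok]] for tok in tokens]


sentence = "the cat sat on the mat".split()
vocab = build_vocab(sentence)
table = make_embedding_table(len(vocab), d=4)
vectors = embed(sentence, vocab, table)
```

Both occurrences of “the” map to the same index and therefore to the same vector, which is what makes the table a shared, learnable representation of each token.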

NLP has seen a boom of interest due to the invention of the Transformer Neural Network architecture [21]. This marks a transition from a focus on Recurrent Neural Networks (RNNs). RNNs iteratively process a sequence piece by piece, usually with explicit internal memory such as in Long Short-Term Memory (LSTM) models. The main attraction of the Transformer is the use of attention layers. The attention layer was invented to help RNNs preserve information from early tokens in the sequence. The famous paper “Attention Is All You Need” [21] showed that attention layers are potent enough on their own to do away with recurrent sequence processing. Another benefit of this is the ability to massively parallelize the computation in the networks. The importance of this parallelization is best described with a quick history of AlexNet in Computer Vision.

The success of AlexNet [22] in the Computer Vision task of image classification was a large driver of interest in Deep Learning. AlexNet is an implementation of a Convolutional Neural Network, a new architecture at the time that has since been widely adopted. The forward and backward computation in Convolutional and Transformer Neural Networks can run in parallel. Parallelization enables massive computing acceleration from Graphics Processing Units (GPUs). A similar breakthrough has happened in NLP with Transformers. This perfect marriage with parallel GPU computing has dramatically improved Deep Learning performance.
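To make the attention layer and its parallel structure more concrete, the sketch below implements scaled dot-product attention in plain Python. It is a simplification that omits the learned query, key, and value projections and the multiple heads of a full Transformer layer, and the function names and toy inputs are assumptions for illustration.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d = len(keys[0])
    outputs, all_weights = [], []
    # Each query is handled independently of the others, so this loop
    # could run in parallel across positions.
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Self-attention on three toy 2-dimensional token vectors.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = attention(x, x, x)
```

Unlike a recurrent network, no position has to wait for the result of the previous one, which is the property that lets GPUs process the whole sequence at once.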

Scaling up Transformers allows them to take advantage of big data, a necessary component of Deep Learning success described further in “Limitations of Deep Learning”. Another reason for the advancement of NLP is the success of self-supervised pre-training and transfer learning. It would be extremely challenging to find a big dataset of question-answer pairs related to COVID-19. However, we can find big data in the entire corpus of research published on SARS-CoV-2 and COVID-19. This data is not labeled, so we cannot rely on supervised learning to learn representations from it. The solution to this has been self-supervised language modeling. In the masked variant of this task, tokens are masked out at random and the model predicts what each masked token had originally been. The term “self-supervised” comes from the way this task can use supervised loss functions such as cross-entropy loss on the predicted token, but the task is constructed without human annotation.
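The construction of a masked language modeling example from unlabeled text can be sketched as below. The function name `make_mlm_example`, the `[MASK]` placeholder string, and the default masking probability of 0.15 (the rate used by BERT) are assumptions for illustration; production implementations add further details such as occasionally keeping or randomly replacing the selected tokens.

```python
import random

MASK = "[MASK]"


def make_mlm_example(tokens, mask_prob=0.15, seed=0):
    """Build (inputs, labels) for masked language modeling.

    Labels come from the text itself, so no human annotation is needed.
    A label of None marks a position that does not contribute to the loss.
    """
    rng = random.Random(seed)
    inputs, labels = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            inputs.append(MASK)   # hide the token from the model
            labels.append(tok)    # the original token becomes the target
        else:
            inputs.append(tok)
            labels.append(None)
    return inputs, labels


tokens = "the spike protein binds to the ACE2 receptor".split()
inputs, labels = make_mlm_example(tokens, mask_prob=0.3, seed=1)
```

The model is then trained with a cross-entropy loss on the masked positions only, which is how a supervised loss function is reused without manual labels.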

After self-supervised language modeling on a large corpus, the model is transferred to a new task, such as Yelp review sentiment classification. The initialization of the neural network from the weights learned by language modeling is an incredibly powerful starting point. Gururangan et al. [23] show the importance of in-domain data for this self-supervised pre-training. General-purpose language models such as BERT [24] or GPT [25] are trained on a massive corpus, such as all the text on Wikipedia, a massive set of books, and articles sourced from the internet. Language models repurposed for COVID-19 literature mining tasks, such as BioBERT [26] or SciBERT [27], are pre-trained on a more domain-relevant corpus of scientific papers and biomedical literature. Another example, COVID-Twitter-BERT [28], is pre-trained on a corpus of tweets about COVID-19. In-domain pre-training is extremely important for the success of transfer learning for COVID-19 NLP tasks. We will return to this theme in our discussion of Medical Image Analysis as well.

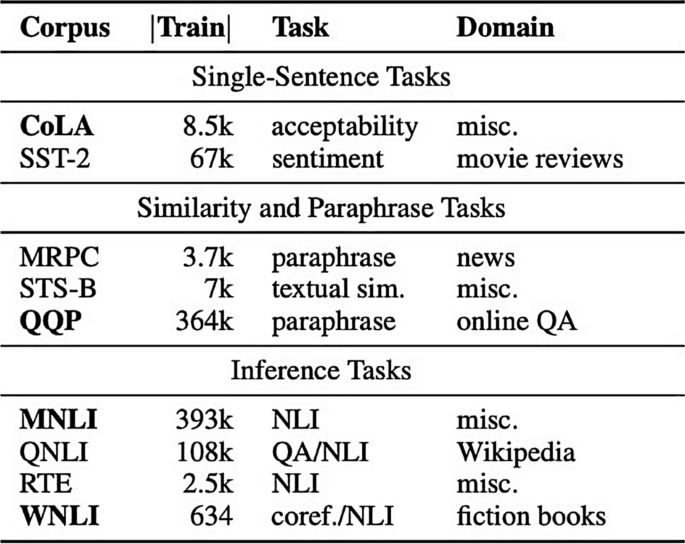

We present NLP applications for COVID-19 ordered by difficulty with respect to current Deep Learning systems. Figure 2 is a quick description of the GLUE NLP benchmark. This table gives information about each task such as how many training examples are in each dataset, a quick description of the task, and a high-level summary of the data domain [29]. The GLUE benchmark is a set of tasks to evaluate NLP systems. The latest NLP systems perform so well at these tasks that a new benchmark, SuperGLUE [30], has since been designed. Starting with the GLUE benchmark should provide readers a solid foundation for understanding what current NLP can easily solve. We explain how these tasks are set up as a Deep Learning problem to help readers understand the similarities of GLUE tasks with our surveyed COVID-19 applications. We will then transition to adapting these task formulations to COVID-19 applications.

What tasks has NLP conquered? A quick overview of the number of examples, tasks, and domains contained in the GLUE benchmark

The GLUE benchmark divides supervised learning tasks into categories of Single-Sentence, Similarity and Paraphrase, and Inference. These categories mostly distinguish the input format for classification tasks. Single-Sentence deals with one sentence as input, whereas similarity and inference deal with two. On the GLUE benchmark, this text is sentence-length sequences. The length of the sequence is an important distinction to make. Intuitively, it might seem easier to classify a longer sequence, such as an entire COVID-19 clinical report; however, attention over a long document has a much higher computational cost than sentence-length input sequences.

In the GLUE benchmark, single sentences are classified based on whether they are grammatically acceptable or whether they are positive or negative in sentiment. Text Classification applications in COVID-19 include certain approaches to Misinformation Detection, Public Sentiment Analysis, topic classification, and question category classification. Misinformation Detection and Public Sentiment Analysis research usually works with Twitter data. Tweets suit NLP models well because they are limited to 280 characters and so fit easily within a model's input length. An example of this is COVID-Twitter-BERT from Muller et al. [28].

Topic classification with scientific papers addressing COVID-19 requires constructing a heavily truncated atomic unit for papers. We cannot pass entire scientific papers as input to most NLP models. An application example of this is filtering out COVID-19 papers focused solely on Radiological findings from Liang and Xie [31]. Another example of this task is COVID-19 question classification from Wei et al. [32]. Wei et al. fine-tune BERT to categorize public questions about COVID-19 into categories such as transmission, societal effects, prevention, and more. This helps public officials understand what the public is concerned about with respect to COVID-19.

Similarity tasks in the GLUE benchmark are based on telling if two text sequences have the same semantic meaning. In GLUE, this is explored on miscellaneous data sources, news snippets, and questions asked on the Quora social network. In applications to Misinformation Detection, we might have a list of common rumors about COVID-19. We can use these semantic similarity models to detect when these rumors are being spread on social media. This detection is much more flexible than keyword models that would simply look for terms like “5G”. Semantic similarity models also play an enormous role in information retrieval systems.

Information retrieval models try to find the most relevant information given a query. This is executed by performing the same task, but at multiple stages of granularity. Generally, we will consider these stages of high recall and high precision as retrieval and re-ranking. Retrieval and re-ranking could be further decomposed to trade off between higher precision and more computation. The first retrieval stage has typically been done by hand-crafted TF-IDF and BM25 text features. Only very recently has information retrieval looked at transformer-derived representations for the first retrieval stage [33]. The second stage of re-ranking takes the retrieved documents as input and assigns a more precise relevance score to each document, sorting them by the highest score to answer the query.
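
To make the two stages concrete, the following sketch scores a toy corpus with BM25 and then re-orders the top candidates with a second scoring function. It is an illustration under stated assumptions: the idf formula is the smoothed variant used by common search libraries, the parameters `k1` and `b` are conventional defaults, the documents are invented, and `term_overlap` is a hypothetical stand-in for the neural re-ranker that a real system would use.

```python
import math
from collections import Counter

def bm25_scores(query, documents, k1=1.5, b=0.75):
    """Score every document against the query with BM25 (whitespace tokens)."""
    tokenized = [doc.lower().split() for doc in documents]
    n_docs = len(tokenized)
    avg_len = sum(len(doc) for doc in tokenized) / n_docs
    doc_freq = Counter(term for doc in tokenized for term in set(doc))
    scores = []
    for doc in tokenized:
        tf = Counter(doc)
        score = 0.0
        for term in query.lower().split():
            if term not in tf:
                continue
            # Rare terms get a larger inverse document frequency weight.
            idf = math.log((n_docs - doc_freq[term] + 0.5) / (doc_freq[term] + 0.5) + 1.0)
            length_norm = k1 * (1 - b + b * len(doc) / avg_len)
            score += idf * tf[term] * (k1 + 1) / (tf[term] + length_norm)
        scores.append(score)
    return scores

def retrieve_then_rerank(query, documents, rerank_fn, k=2):
    """Stage 1: keep the top-k BM25 hits. Stage 2: re-order them with rerank_fn."""
    first_stage = bm25_scores(query, documents)
    candidates = sorted(range(len(documents)), key=lambda i: first_stage[i], reverse=True)[:k]
    return sorted(candidates, key=lambda i: rerank_fn(query, documents[i]), reverse=True)

def term_overlap(query, document):
    """Hypothetical re-ranker stand-in: Jaccard overlap of the two term sets."""
    q, d = set(query.lower().split()), set(document.lower().split())
    return len(q & d) / len(q | d)

documents = [
    "ethanol purity for surface decontamination",
    "uv light intensity for inactivating coronavirus",
    "coronavirus spread forecasting with neural networks",
    "public sentiment on twitter",
]
query = "inactivating coronavirus with uv light"
ranked = retrieve_then_rerank(query, documents, term_overlap, k=2)
print([documents[i] for i in ranked])
```

The expensive second-stage function only ever sees `k` documents, which is the point of splitting the work into a cheap high-recall stage and a costly high-precision stage.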

The next task in NLP relevant to COVID-19 applications is question answering (QA). QA has been well studied on the SQuAD benchmark [34]. This formulation of question answering (QA) is referred to as extractive QA. The model has to classify the answer as a start and end span within a provided chunk of context. The supervised learning problem is to output the indices from the context. For COVID-19 extractive question answering, an important question is, how do we get the context to classify the answer in? One solution would be to pair extractive QA with the information retrieval systems previously described to provide context to find the answer span. In addition to SQuAD-style questions, we look at complex question answering. Complex, or multi-hop, question answering requires the model to combine information from different sources to derive the answer.
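
The span-selection step of extractive QA can be sketched as follows. We assume a model has already produced one start score and one end score per context token; the scores below are invented for illustration, and `best_span` with its `max_answer_len` limit is a hypothetical helper rather than the decoding routine of any particular system.

```python
def best_span(start_scores, end_scores, max_answer_len=10):
    """Return the (start, end) token indices with the highest combined score.

    Only spans with start <= end and at most max_answer_len tokens are considered.
    """
    best_total, best_start, best_end = float("-inf"), 0, 0
    for start, start_score in enumerate(start_scores):
        last = min(start + max_answer_len, len(end_scores))
        for end in range(start, last):
            total = start_score + end_scores[end]
            if total > best_total:
                best_total, best_start, best_end = total, start, end
    return best_start, best_end

# Question: "What does the virus bind?" (context wording is illustrative)
context = "The virus binds the ACE2 membrane protein".split()

# Hypothetical per-token scores from a QA model.
start_scores = [0.1, 0.2, 0.1, 0.5, 2.0, 0.3, 0.1]
end_scores = [0.0, 0.1, 0.2, 0.1, 0.4, 0.6, 1.8]

start, end = best_span(start_scores, end_scores)
answer = " ".join(context[start:end + 1])
print(answer)
```

The model never generates text in this formulation: the answer is always a contiguous slice of the provided context, identified by two indices.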

Some approaches to QA surveyed generate the answer directly [35], rather than classifying its presence in a context. We consider this formulation to be more akin to abstractive question answering. We will explore two approaches to QA, either using symbolic knowledge graphs or relying on a neural system. A symbolic knowledge graph is desirable for the sake of interpretability. However, manual relation labeling in the COVID-19 literature does not scale. Neural systems still play a role in constructing these knowledge graphs through Named Entity Recognition and Relation Extraction tasks. The alternative approach to using symbolic knowledge graphs is to rely solely on Deep Learning models. In this case, a Deep Learning model stores all the information it needs to answer questions in its parameters [36]. We refer readers to [37] for current work on Knowledge Intensive Tasks in NLP.

Another set of NLP tasks that are interesting for COVID-19 applications is abstractive question answering, summarization, and chatbots. These tasks require the model to devise novel sequences of text to answer questions, summarize articles, or chat with a user. We view each of these application areas as facing the same problem, differing with respect to the size of the input and output. All of these applications may be expected to contain a massive amount of information, whether that information is accessed directly in the parameters of the model or in the input context window. We note that these types of models are still in the early stage of development. We survey Abstractive QA and summarization models implemented in Literature Mining systems such as CO-Search [38] and CAiRE-COVID [39].

The ambition of NLP tasks in COVID-19 research ranges from well-studied GLUE-style problems, to extractive question answering when the context is provided, and then up to chatbots and abstractive summarization. We note that at the time of this publication, reliable abstractive summarization is a moonshot application of Deep Learning. Artificial Intelligence is an exciting and imagination-provoking technology. The results from GPT-3, a 175 billion parameter language model, have been extremely inspiring. COVID-19 researchers are certainly pushing the limits of what NLP can do.

Literature Mining

The COVID-19 pandemic ignited a call to arms of scientists across the world. Consequently, searching for signal in the noise is more challenging. The most popular open literature dataset, CORD-19 [40], contains over 128,000 papers. These papers contain information about SARS-CoV-2, as well as related coronaviruses such as SARS and MERS, information about COVID-19, and relevant papers in relation to drug repurposing. No single human being or group of human beings could be expected to read this amount of text. The need to organize a massive scale of text data has inspired development of many NLP systems.

CORD-19: The COVID-19 Open Research Dataset [40] is the most popular dataset containing this growing body of literature. The dataset consists of data from publications and preprints on COVID-19, as well as historical coronaviruses such as SARS and MERS. These papers are sourced from PubMed Central (PMC), PubMed, the World Health Organization’s COVID-19 database, and preprint servers such as bioRxiv, medRxiv, and arXiv. The CORD-19 research paper documents the rapid growth of their dataset from an initial release of 28,000 papers when published on April 22nd, up to 140,000 when revised on July 10th. We observed documentation of this explosive growth as well when surveying Literature Mining systems built on top of this dataset. The CORD-19 data is cleaned and implemented with the same system used for the Semantic Scholar Open Research Corpus [41].

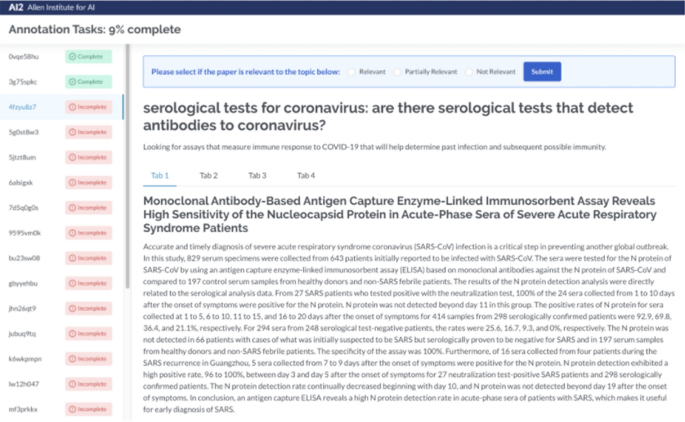

Whereas CORD-19 is general purpose, TREC-COVID [42] is more narrowly focused on a test evaluation of information retrieval. The authors state the twin goals of the dataset are “to evaluate search algorithms and systems for helping scientists, clinicians, policy makers, and others manage the existing and rapidly growing corpus of scientific literature related to COVID-19 and to discover methods that will assist with managing scientific information in future global biomedical crises” [43]. The TREC-COVID dataset consists of topics where each topic is composed of a query, question, and narrative. The narrative is a longer description of the question. Figure 3 shows an example of the interface the authors use to label the TREC-COVID dataset.

Interface for human labeling TREC-COVID documents

The following list provides a quick description of some Literature Mining systems built from datasets such as CORD-19 and TREC-COVID. These systems use a combination of Information Retrieval, Knowledge Graph Construction, Question Answering, and Summarization to facilitate exploration into the COVID-19 scientific literature.

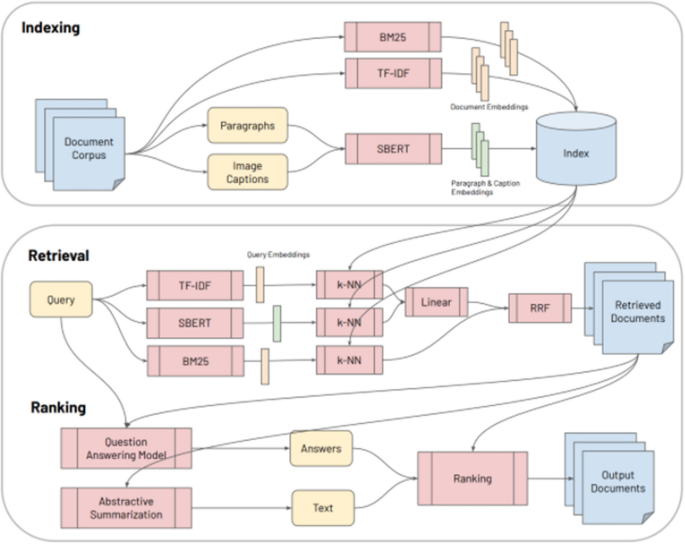

- CO-Search [38] is a Retrieve-then-Rank system composed of many parts, shown in Fig. 4. Before answering any user queries, the entire document corpus is encoded with Sentence-BERT (SBERT) [44], TF-IDF, and BM25 features. A user enters a query and it is encoded with a similar combination of featurizers. This query encoding is used to index the featurized documents and thus return the most semantically similar documents to the query. Having retrieved these documents, the next task is ranking for presentation to the user. First, the retrieved documents and query are passed as input to a multi-hop question answering model and an abstractive summarization system. The outputs from these models are weighted with the original scoring from the retrieval step, and the top scoring documents are presented to answer the query. CO-Search is a combination of many cutting-edge NLP models. The pre-training task for its SBERT encoder is particularly interesting. The authors train SBERT to take a paragraph from a research paper and classify whether it cites another paper, given only the title of the other paper. SBERT is a siamese architecture which takes one sequence as input at a time. SBERT uses the cosine similarity loss between the output representation of each separately encoded sequence to compare the paragraph and the title. The representation learned from this pre-training task is then used to encode the documents and queries, as previously described. We will unpack the question answering and abstractive summarization systems later in the survey.

Fig. 4

(Image taken from Esteva et al. [38])

CO-Search System Architecture

- Covidex [45] is a Retrieve-then-Rank system combining keyword retrieval with neural re-ranking. Covidex differs most from CO-Search in two respects: it takes a sequence-to-sequence (seq2seq) approach to re-ranking, and it bootstraps the training of this model from the MS MARCO [46] passage ranking dataset. The MS MARCO dataset contains 8.8M passages obtained from the top 10 results by the Bing search engine, corresponding to 1M unique queries. The monoT5 [47] seq2seq re-ranker takes as input “Query: q Document: d Relevant:” to classify whether the document is relevant or not to the query.

- SLEDGE [48] deploys a similar pipeline of keyword-based retrieval followed by neural ranking. Unlike Covidex, SLEDGE utilizes the SciBERT [27] model for re-ranking. SciBERT is an extension to BERT that has been pre-trained on scientific text. Additionally, the authors of SLEDGE find large gains by integrating the publication date of the articles into the input representation.

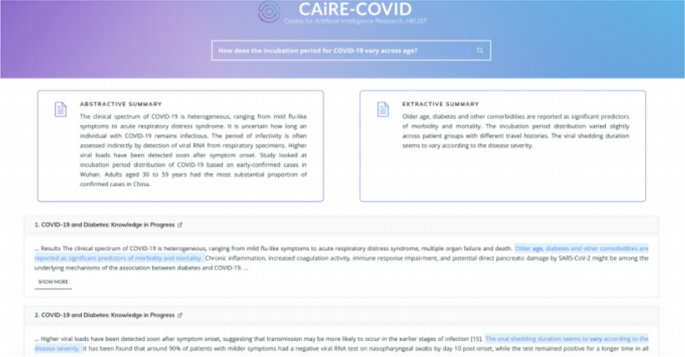

- CAiRE-COVID [39] is a similar system to CO-Search. The primary difference comes from the use of the MRQA [49] model and from avoiding fine-tuning the QA models on COVID-19 related datasets. This system tests the generalization of existing QA models, which consist of a pre-trained BioBERT [26] fine-tuned on the SQuAD dataset. The user interface of CAiRE-COVID is depicted in Fig. 5.

Fig. 5

User Interface for the CAiRE-COVID Literature Mining system consisting of Extractive Summary, Abstractive Summary, and most relevant documents

The systems in the preceding list are examples of Information Retrieval (IR) systems. As mentioned previously, IR describes the task of finding relevant documents from a large set of candidates given a query. These systems typically deploy multi-stage processing to break up the computational complexity of the problem. The first stage is the retrieval stage, which returns the set of documents that best match the query, a set much smaller than the full collection. This first stage of retrieval has only recently integrated the use of neural representations. As previously listed, many systems combine TF-IDF or BM25 sparse vectors with dense representations derived from SBERT. The relationship between these representations is well stated in Karpukhin et al. [33], “the dense, latent semantic encoding of contexts and questions is complementary to the sparse vector representation by design”. This describes how some queries benefit massively from keyword features, whereas others need the contextual information captured in SBERT-style representations.

Deep Neural Networks can be viewed as compression machines for the sake of semantic nearest neighbor retrieval. They take high-dimensional inputs such as the matrix embedding of a paragraph and gradually compress it into a much lower dimensional vector. BERT takes in a sequence of 512 tokens and embeds it into a matrix of size (512 × 768), 768 being the hidden dimension used in the BERT-base release. BERT then processes this matrix through 12 transformer blocks, eventually connecting it to fully-connected layers that end up with an output vector corresponding to each token index. In the BERT architecture, the final vector is taken from the output at the first position, the [CLS] token. This final vector is the representation of the original sequence of 512 tokens. The SBERT architecture, used in the listed systems, has a more explicit aggregation for the final output vector, rather than indexing the [CLS] token.
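
The difference between indexing the [CLS] position and aggregating over all positions can be shown on a toy matrix. The sketch below assumes the per-token output vectors are already computed; averaging over non-padding tokens is used here as one example of an explicit aggregation, and the helper names and 2-dimensional vectors are illustrative rather than taken from any library.

```python
def cls_pooling(token_vectors):
    """Use the output vector at position 0 (the [CLS] token) as the sequence vector."""
    return list(token_vectors[0])

def mean_pooling(token_vectors, attention_mask):
    """Average the output vectors of real tokens, skipping padding positions."""
    kept = [vec for vec, keep in zip(token_vectors, attention_mask) if keep]
    dim = len(token_vectors[0])
    return [sum(vec[i] for vec in kept) / len(kept) for i in range(dim)]

# Toy output: 4 positions x 2 hidden dimensions (BERT-base would be 512 x 768).
token_vectors = [
    [1.0, 0.0],  # [CLS]
    [0.0, 2.0],
    [2.0, 4.0],
    [9.0, 9.0],  # padding position, excluded by the mask
]
attention_mask = [1, 1, 1, 0]

print(cls_pooling(token_vectors))                    # [1.0, 0.0]
print(mean_pooling(token_vectors, attention_mask))   # [1.0, 2.0]
```

Either way, a variable-length sequence is reduced to one fixed-size vector that can be stored in an index and compared against query vectors.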

In the first stage of Neural Retrieval, we would like to use these vector representations to find the most similar documents to our query. BERT is very successful at pair-wise regression and classification tasks. This is where two sequences are passed in as input, separated by a [SEP] token. The cross-sequence attention in BERT classifies the relationship of the sequences. This is the setup for tasks like Semantic Text Similarity (STS), Natural Language Inference (NLI), and Quora Question Pairs (QQP). However, this setup is costly for information retrieval: every query-document pair requires its own forward pass to get a similarity classification, so the number of comparisons grows with the product of the number of queries and the number of documents.

Sentence-BERT (SBERT) begins the transition to Transformer-based neural retrieval. SBERT uses a siamese architecture that avoids the pairwise bottleneck of BERT. Siamese architectures describe passing two inputs separately through a neural network and comparing the output representations. Each SBERT “tower” takes a single sequence as input and is trained with a cosine similarity loss. SBERT is then used to encode the documents from a database. Nearest neighbor GPU index optimizations [50] are extremely fast at finding the most similar representations to a query embedding. This is a significant improvement because the semantics contained in these representations are much better than TF-IDF or BM25 features. The second stage is the refining or re-ranking of initially matched documents. The second stage faces a much smaller set of total documents than initial retrieval and the bottleneck of pairwise models is negligible.
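
A minimal sketch of siamese-style retrieval follows, together with the arithmetic behind the pairwise bottleneck. The 3-dimensional vectors stand in for embeddings that an encoder would produce ahead of time, and the query and document counts are arbitrary; none of these values come from the surveyed systems.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def top_k(query_vec, doc_vecs, k):
    """Indices of the k documents whose embeddings are closest to the query."""
    ranked = sorted(range(len(doc_vecs)),
                    key=lambda i: cosine(query_vec, doc_vecs[i]),
                    reverse=True)
    return ranked[:k]

# Hypothetical embeddings, computed once and stored before any query arrives.
doc_vecs = [[0.9, 0.1, 0.0], [0.1, 0.8, 0.1], [0.7, 0.3, 0.1]]
query_vec = [1.0, 0.2, 0.0]
nearest = top_k(query_vec, doc_vecs, k=2)
print(nearest)

# Encoder forward passes needed to serve n_queries over n_docs.
n_queries, n_docs = 100, 10_000
cross_encoder_passes = n_queries * n_docs   # one pass per (query, document) pair
bi_encoder_passes = n_queries + n_docs      # each text is encoded exactly once
print(cross_encoder_passes, bi_encoder_passes)
```

Under these assumed counts, the pairwise setup needs a million forward passes while the siamese setup needs about ten thousand, after which only cheap vector comparisons remain.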

When asking a question about COVID-19, we might not want to be redirected to a list of articles to answer our question. We desire intelligent systems that can directly answer our question. This is the challenge of Question Answering. Tang et al. [51] describe the challenge of constructing datasets for COVID-19 QA in a similar format as the SQuAD dataset. Five annotators working on constructing this dataset for 23 hours produced 124 question-article pairs. This includes deconstruction of topics such as “decontamination based on physical science” into multiple questions such as “UVGI intensity used for inactivating COVID-19” and “Purity of ethanol to inactive COVID-19”. The authors demonstrate the zero-shot capabilities of pre-trained language models on these questions. This is an encouraging direction as Schick and Schütze [52, 53] have recently shown how to perform few-shot learning with smaller language models.

Knowledge Graph Construction

One of the best mechanisms of organizing information is the use of Knowledge Graphs. Figure 6 is an example of a Knowledge Graph of our surveyed Deep Learning applications for COVID-19. Each relation in this example is A “contains” B. This is an illustrative example of organizing information topologically. It is often easier to understand how complex systems work or ideas connect when explicitly linked and visualized this way. We want to construct these kinds of graphs with all of the biomedical literature relevant to COVID-19. This raises questions about the construction and usability of knowledge graphs. Can we automatically construct these graphs from research papers and articles? At what scale is this organization too overwhelming to look through?

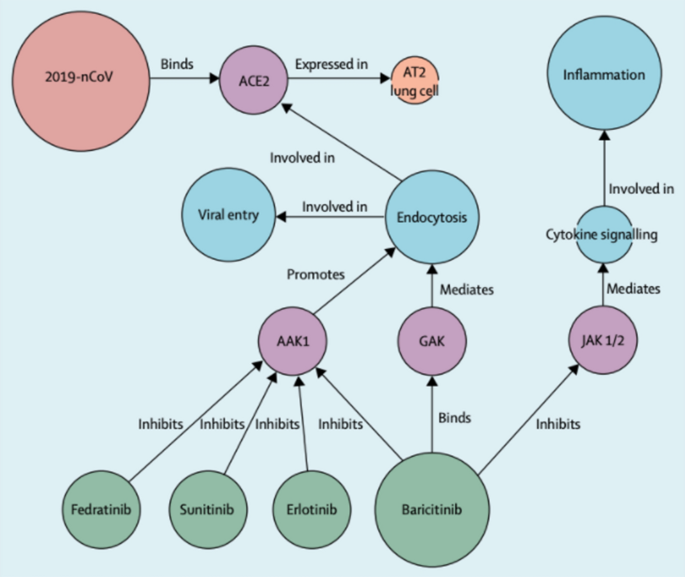

In application to COVID-19, we would like to construct Biomedical Knowledge Graphs. These graphs capture entities such as proteins and drugs and the relations between them, such as “chemical A inhibits the binding of protein B”. Richardson et al. [8] describe how they use the BenevolentAI knowledge graph to discover baricitinib as a potential treatment for COVID-19. In this section, we focus on how NLP is used to construct these graphs. Under our “Life Sciences” section, we will discuss the potential use of graph neural networks to mine information from the resulting graph-structured data.

Figure 7 shows examples of different nodes and links. We see the 2019-nCoV node (continually referred to as COVID-19 in our survey), the ACE2 membrane protein node, and the Endocytosis cellular process node, to name a few. The links describe how these different nodes are related, such as 2019-nCoV “Binds” ACE2 and ACE2 “Expressed in” AT2 lung cell. Richardson et al. [8] describe how this structure allows them to query the graph and form hypotheses about approved drugs. The authors start from the hypothesis that the AAK1 protein is one of the best-known regulators of endocytosis and that disrupting it might stop the virus from entering cells. The knowledge graph returns 378 AAK1 inhibitors, 47 having been approved for medical use and 6 that have inhibited AAK1 with high affinity. However, the knowledge graph shows that many of these compounds have serious side-effects. Baricitinib, one of the 6 AAK1 inhibitors, also binds another kinase that regulates endocytosis. The authors reason that this can reduce both viral entry and inflammation in patients. This is further described in Stebbing et al. [54].

BenevolentAI Knowledge Graph used to suggest baricitinib as a treatment for COVID-19

We note that this kind of traversal of the Knowledge Graph requires significant prior knowledge on the part of the human-in-the-loop. This motivates our emphasis on Human-AI interaction in “Limitations of Deep Learning”. Without human-accessible interfaces, the current generation of Artificial Intelligence systems is useless. We will describe how Deep Learning, rather than expert querying, can be used to mine these graphs in our section on “Life Sciences”. Within the scope of NLP, we describe how these graphs are constructed from large datasets. This is referred to as Automated Knowledge Base Construction.

A Knowledge Graph is composed of a set of nodes and edges. We can set this up as a classification task where Deep Learning models are tasked to classify nodes in a body of text. This task is known as Named Entity Recognition (NER). In addition to identifying nodes, we want to classify their relation. As a supervised learning task, these labels include the set of edges we want our Knowledge Graph to contain, such as “inhibits” or “binds to”. Deep Learning serves to classify nodes and edges according to a human-designed set, not to define new nodes and relations. Describing their system called PaperRobot, built prior to the pandemic outbreak, Wang et al. state that “creating new nodes usually means discovering new entities (e.g. new proteins) through a series of laboratory experiments, which is probably too difficult for PaperRobot” [55].
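
As a data structure, such a graph can be stored as (head, relation, tail) triples. The sketch below is a toy illustration: the `KnowledgeGraph` class and its methods are hypothetical, and the four triples paraphrase relations mentioned in this section rather than reproduce the contents of any real knowledge base.

```python
from collections import defaultdict

class KnowledgeGraph:
    """Toy triple store: each edge is a (head, relation, tail) triple."""

    def __init__(self):
        self._out = defaultdict(list)

    def add(self, head, relation, tail):
        self._out[head].append((relation, tail))

    def neighbors(self, head, relation=None):
        """Tails reachable from head, optionally restricted to one relation."""
        return [tail for rel, tail in self._out.get(head, [])
                if relation is None or rel == relation]

    def heads(self, relation, tail):
        """All nodes that point at tail through the given relation."""
        return sorted(head for head, edges in self._out.items()
                      if (relation, tail) in edges)

kg = KnowledgeGraph()
kg.add("2019-nCoV", "binds", "ACE2")
kg.add("ACE2", "expressed in", "AT2 lung cell")
kg.add("AAK1", "regulates", "endocytosis")
kg.add("baricitinib", "inhibits", "AAK1")

# "Which entities inhibit a regulator of endocytosis?"
regulators = kg.heads("regulates", "endocytosis")
inhibitors = [drug for protein in regulators for drug in kg.heads("inhibits", protein)]
print(regulators, inhibitors)
```

In this framing, NER decides which strings become nodes and relation classification decides which labeled edge, if any, is added between two nodes found in the same passage.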

The labeled nodes are linked together with an entity ontology defined by biological experts. As defined by Guarino et al., “computational ontologies are a means to formally model the structure of a system, i.e., the relevant entities and relations that emerge from its observation, and which are useful to our purposes” [56]. Wang et al. [57] link the MeSH ID entities together based on the Comparative Toxicogenomics Database (CTD) ontology. From Davis et al. [58], “CTD is curated by professional biocurators who leverage controlled vocabularies, ontologies, and structured notation to code a triad of core interactions describing chemical-gene, chemical-disease and gene-disease relationships”.

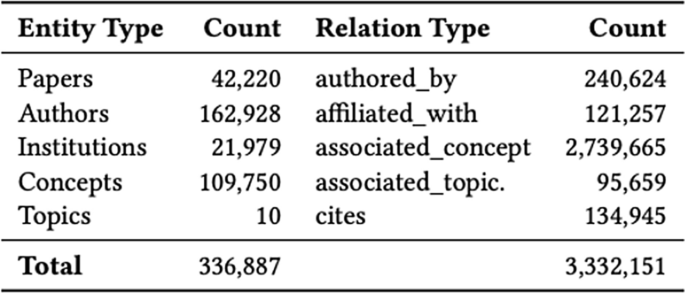

Wise et al. [59] construct a similar graph containing 336,887 entities and 3,332,151 relations. The sets of nodes and edges are shown in Fig. 8. The authors use a combination of graph and semantic embeddings to answer questions with the top-k most similar articles, similar to the Information Retrieval systems previously described. Hosted on http://www.CORD19.aws, their system has seen over 15 million queries across more than 70 countries.

Meta-data on the count of Entities in CKG and Relation information

Zeng et al. [61] describe the construction of a more ambitious Knowledge Graph from a large scientific corpus of 24 million PubMed publications. Their graph contains 15 million edges across 39 types of relationships connecting drugs, diseases, proteins, genes, pathways, and expression. From their graph they propose 41 repurposable drugs for COVID-19. Chen et al. [62] present a more skeptical view of NER for automated COVID-19 knowledge graph construction. They highlight that even the more in-domain BioBERT model has not been trained on enough data to recognize entities such as “Thrombocytopenia”, or even “SARSCOV-2”. They instead use a co-occurrence frequency to extract nodes and use word2vec [63] similarity to filter edges.
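
Co-occurrence counting, the general idea behind the last approach, can be sketched as follows. This is not the pipeline of Chen et al. [62]: the entity list, the sentences, the substring matching, and the `min_count` threshold are all assumptions made for illustration, and the word2vec-based edge filtering step is omitted.

```python
from collections import Counter
from itertools import combinations

def cooccurrence_edges(sentences, entities, min_count=2):
    """Propose an edge for every entity pair that shares at least min_count sentences."""
    counts = Counter()
    for sentence in sentences:
        text = sentence.lower()
        # Naive substring matching; a real system would need proper entity linking.
        present = sorted(e for e in entities if e.lower() in text)
        counts.update(combinations(present, 2))
    return {pair: n for pair, n in counts.items() if n >= min_count}

entities = ["ACE2", "SARS-CoV-2", "AAK1", "endocytosis"]
sentences = [
    "SARS-CoV-2 binds ACE2.",
    "SARS-CoV-2 binds the ACE2 membrane protein.",
    "AAK1 regulates endocytosis.",
]

edges = cooccurrence_edges(sentences, entities)
print(edges)
```

Such edges carry no relation label, which is the trade-off against supervised relation extraction: no labeled training data is required, but the graph only records that two entities are mentioned together.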

In SciSight [64], the authors design a knowledge graph that is integrated with the social dynamics of scientific research. They motivate their approach by highlighting that most of these Literature Mining systems are designed for targeted search. Targeted search is defined as search where the researchers know what they are looking for. SciSight is designed for exploration and discovery. This is done by constructing a knowledge graph of topics as well as the social graphs of the researchers themselves.

Misinformation Detection

The spread of information related to SARS-CoV-2 and COVID-19 has been chaotic, a situation denoted an infodemic [65]. With conspiracy theories ranging from blaming 5G networks for the disease to false treatments and false reporting of scientific information, how can Deep Learning be used to fight the infodemic? In our survey we will look at this through the lens of the spread of misinformation and the detection of it. The detection of misinformation has been formulated as a text classification or semantic similarity problem. Our original description of the GLUE benchmark should help readers understand the core Deep Learning problem in the following surveyed experiments.

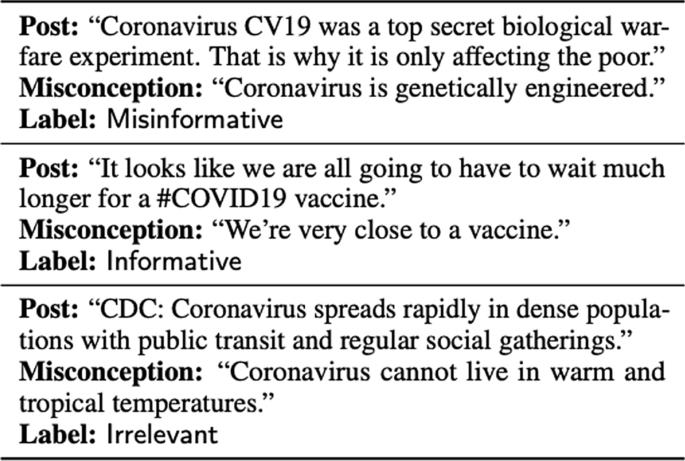

Many studies have built classification models to flag tweets potentially containing misinformation. These papers mostly differ in how they label these tweets. Alam et al. [66] label tweets according to 7 questions: whether the tweet contains a verifiable factual claim, is likely to contain false information, is of interest to the general public, is potentially harmful to a person, a company, a product, or society, requires verification by a fact-checker, poses a specific kind of harm to society, and requires the attention of a government entity. Dharawat et al. [67] look at the seriousness of misinformation, reasoning that “urging users to eat garlic is less severe than urging users to drink bleach”. Their Covid-HeRA dataset contains 61,286 tweets labeled as not severe, possibly severe, highly severe, refutes/rebuts, and real news/claims. Hossain et al. [68] collaborate with researchers from the UCI School of Medicine to establish a set of common misconceptions. These misconceptions are used to label tweets. Examples of this are shown in Fig. 9.

Examples of Misinformation Labels

The detection of misinformation and fact verification was studied before the COVID-19 infodemic. The most notable dataset for this is the Fact Extraction and Verification (FEVER) dataset [70]. This dataset contains 185,445 claims generated by human annotators. The annotators of the dataset were presented an introduction section of a Wikipedia article and asked to generate a factual claim and then perturb that claim such that it is no longer factually verified. We refer readers to their paper to learn about additional challenges of constructing this kind of dataset [70].

Constructing a new dataset that properly frames a new task is a common theme in Deep Learning. Wadden et al. [71] construct the SciFact dataset to extend the ideas of FEVER to COVID-19 applications. This is different from classification task formulations previously mentioned in that they are more creative in task design. SciFact and FEVER introduce new datasets that show how supervised learning can tackle Misinformation Detection. Wadden et al. [71] design the SciFact dataset to not only classify a claim as true or false, but to provide supporting and refuting evidence as well. Wadden et al. [71] deploy a clever annotation scheme of using “citances”, sentences in scientific papers that cite another paper, as examples of supporting or refuting evidence for a claim. Examples of this are shown in Fig. 10. The baseline system they deploy to test the dataset is an information retrieval model. We refer readers to our previous section on Literature Mining to learn more about these models.

Examples of COVID-19 claims and the corresponding retrieved evidence

The authors of SciFact and FEVER baseline their datasets with neural information retrieval systems. Their systems rely on sparse feature vectors such as TF-IDF and distributed representations of knowledge implicitly stored in neural network weights. In the previous section we described Knowledge Graphs. Knowledge Graphs for fact verification may be more reliable than neural network systems. We could additionally keep an index of sentences and passages that resulted in relations added to the knowledge graph. This could facilitate evidence explanations. The primary difference with this approach is the extent of automation. Querying a Knowledge Base and sifting through relational evidence requires much more human-in-the-loop interaction than neural systems. This approach is also bottlenecked by the problem of scale with evidence. We will continue to compare the prospects of Knowledge Graphs and Neural Systems in our “Discussion” section.

Public Sentiment Analysis

The uncertainty of COVID-19 and the challenge of quarantine ignited mental health issues for many people. NLP can help us gauge how the public is faring from multiple angles such as economic, psychological, and sociological analysis. Are individuals endorsing or rejecting health behaviors which help reduce the spread of the virus? Previous studies have looked at the use of Twitter data for election sentiment [72, 73]. This section covers extensions of this work looking into aspects of COVID-19.

Twitter is one of the primary sources of data for Public Sentiment Analysis. Other public data sources include news articles or Reddit. Tweet classification is very similar to the Single-Sentence GLUE benchmark [29] tasks previously described. Each Tweet has a maximum of 280 characters, which will easily fit into Transformer Neural Networks. Compared with Literature Mining, there is no need to carefully construct atomic units for Tweets.

The core question with Twitter data analysis is filtering of the extracted Tweets into categories. This is usually done by keyword matching. Muller et al. [28] use the keywords “wuhan”, “ncov”, “coronavirus”, “covid”, and “sars-cov-2”. Filtering by these keywords over a span from January 12th to April 16th, 2020 resulted in 22.5M collected tweets. The authors use this dataset to train COVID-Twitter-BERT with self-supervised language modeling. Previously, we discussed the benefit of in-domain data for self-supervised pre-training detailed in [23]. COVID-Twitter-BERT is then fine-tuned for five different Sentiment classification datasets. Two of these datasets target Vaccine Sentiment and Maternal Vaccine Stance. Compared to fine-tuning the BERT [24] model pre-trained on out-of-domain data sources such as Wikipedia and books, fine-tuned COVID-Twitter-BERT models achieve an average 3% improvement across 5 classification tasks. The average improvement was brought down by a very small improvement on the Stanford Sentiment Treebank 2 (SST-2) dataset, which does not consist of Tweets. This further highlights the benefits of in-domain self-supervised pre-training for Natural Language Processing, and more broadly, Deep Learning applications.
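
The keyword filtering step can be sketched as below. The keyword list is the one quoted above from Muller et al. [28]; the case-insensitive substring matching, the `matches_keywords` helper, and the three example tweets are assumptions made for illustration and may differ from how the original collection was performed.

```python
KEYWORDS = ("wuhan", "ncov", "coronavirus", "covid", "sars-cov-2")

def matches_keywords(tweet, keywords=KEYWORDS):
    """True if any keyword occurs in the tweet, ignoring case."""
    text = tweet.lower()
    return any(keyword in text for keyword in keywords)

# Invented example tweets.
tweets = [
    "Stay home and stay safe #COVID19",
    "Great weather today",
    "New nCoV cases reported this morning",
]

kept = [tweet for tweet in tweets if matches_keywords(tweet)]
print(kept)
```

Substring matching catches hashtag variants such as "#COVID19" without listing them, at the cost of occasional false positives that the downstream classifier has to tolerate.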

Nguyen et al. [74] construct a dataset of 10K COVID tweets. These tweets are labeled as to whether they provide information about recovered, suspected, confirmed, and death cases, as well as location or travel history of the cases, or if they are uninformative altogether. This dataset was used in the competition WNUT-2020 Task 2: Identification of Informative COVID-19 English Tweets. Chauhan [75] describes the efficacy of data augmentation to prevent over-reliance on easy clues such as “deaths” and “died” to identify informative tweets. Sancheti et al. [76] describe the use of semi-supervised learning for this task, finding benefits from leveraging unlabeled tweets.

Loon et al. [77] explore the notion that SARS-CoV-2 “has taken on heterogeneous socially constructed meanings, which vary over the population and shape communities’ response to the pandemic.” They tie Twitter data with Google COVID-19 Community Mobility Reports [78] and find that political sentiment can predict how much people are social distancing. They find that residents social distanced less in areas where the COVID sentiment endorsed concepts of fraud, the political left, and more benign illnesses.

Further exploration into Deep Learning components such as architecture design, loss functions, or activations [79] will not be as important as dataset curation. With a strong dataset, text classification is an easy task for cutting-edge Transformer models. Later on, we will look at the Limitations of Deep Learning that highlight what keeps this task from being solved perfectly. Another topic in our coverage of limitations is the importance of Human-AI interaction. This is relevant for all applications discussed, but especially for public sentiment with respect to user interfaces. Public sentiment is typically interpreted by economists, psychologists, and sociologists who may not be comfortable adding desired functionality to a PyTorch [80] or TensorFlow [81] codebase. We emphasize the importance of user interfaces that allow users to apply Public Sentiment Analysis to free-text answers in surveys.

Computer Vision

Computer Vision, powered by Deep Learning, has a very impressive resume. In 2012, AlexNet implemented a Convolutional Neural Network with GPU optimizations. AlexNet set its sights on the ImageNet dataset. The result significantly surpassed the competition with a 63.3% top-1 accuracy. This marked a large improvement from 50.9% with manually engineered image features. AlexNet inspired further interest in Deep Neural Networks for Computer Vision. Researchers designed new architectures, new ways of representation learning from unlabeled data, and new infrastructure for training larger models. In 2020, eight years after AlexNet, the Noisy Student EfficientNet-L2 model reached 88.4% top-1 accuracy, an absolute improvement of 25.1 percentage points. The Deep Computer Vision resume continues with generation of photorealistic facial images, transferring artistic style from one image to another, and enabling robotic control solely from visual input.

The success of Deep Computer Vision is largely attributed to the ImageNet dataset [82]. The ImageNet competition dataset contains 1.2 million images labeled in 1,000 categories. Images are input to Deep Neural Networks as tensors of the dimension height × width × channels. For example, most ImageNet images have the dimension 128 × 128 × 3 pixels. The resolution of image inputs to Deep Learning is an important consideration for the sake of computational and storage cost.
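To make the storage consideration concrete, the following back-of-the-envelope sketch computes the size of a height × width × channels tensor and of a dataset of such tensors. The function names are hypothetical, and the choice of 1 byte per value (8-bit pixels) versus 4 bytes (32-bit floats) is an assumption about how images are stored; compression and batching are ignored.

```python
def image_tensor_bytes(height, width, channels=3, bytes_per_value=1):
    """Bytes needed for one uncompressed height x width x channels tensor."""
    return height * width * channels * bytes_per_value


def dataset_gigabytes(num_images, height, width, channels=3, bytes_per_value=1):
    """Uncompressed size of a whole dataset in gigabytes (1 GB = 1e9 bytes)."""
    per_image = image_tensor_bytes(height, width, channels, bytes_per_value)
    return num_images * per_image / 1e9


if __name__ == "__main__":
    num_images = 1_200_000  # ImageNet size quoted in the text
    for side in (128, 256, 512):
        as_uint8 = dataset_gigabytes(num_images, side, side)
        as_float32 = dataset_gigabytes(num_images, side, side, bytes_per_value=4)
        print(f"{side} x {side} x 3: {as_uint8:.1f} GB (8-bit), "
              f"{as_float32:.1f} GB (32-bit float)")
```

Because the cost grows with height × width, doubling the side length of an image quadruples its storage, which matters for high-resolution medical images such as CT scans.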

Computer Vision stands to transform Healthcare in many ways. The most salient and frequently discussed application to COVID-19 is medical image diagnosis. Deep Learning has performed extremely well at medical image diagnosis and has undergone clinical trials across many diseases [83]. Medical image tasks mostly consider classification and segmentation, as well as reconstruction from 2-D slices in a CT-scan to make up the final 3-D view.

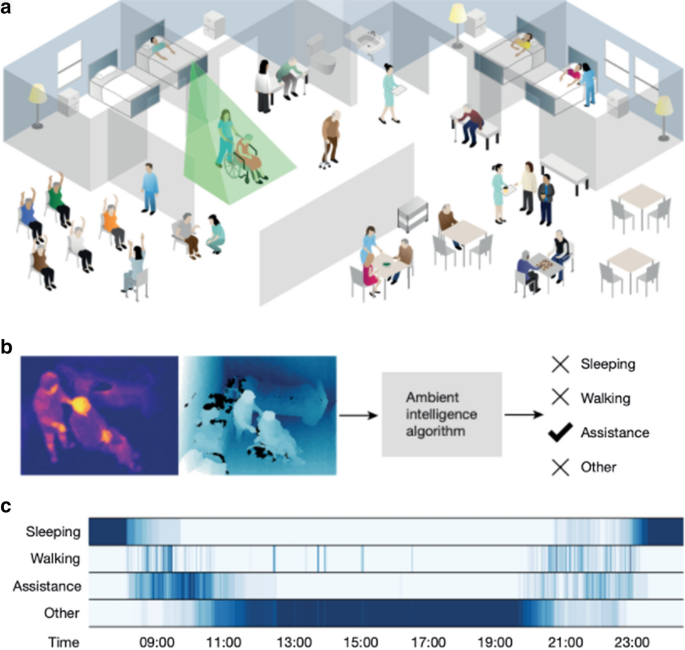

Computer Vision also stands to aid in subtle hospital operations. Haque et al. [10] describe “Ambient Intelligence” where Computer Vision aids in physical therapy to combat ICU-acquired weakness, ensures workers and patients wash their hands properly, and improves surgical training, to name a few. Computer Vision equips cameras to “understand” what they are recording. They can identify people. They can label every pixel of a road for the sake of self-driving cars. They can map satellite images into road maps. They can imagine what a sketch drawing would look like if it were a photographed object. Here we use “understand” for the sake of hyperbole, really meaning that they can answer semantic questions about image or video data. These applications describe the potential of Computer Vision to enable a large set of subtle applications in pandemic response, such as face mask detection and monitoring social distancing or hospital equipment inventory. We hope to excite readers about the implications and gravity of this technology; however, in “Limitations of Deep Learning” we highlight “Data Privacy” issues with this kind of surveillance.

Economic damage is one of the greatest casualties of COVID-19. How can we engineer contact-free manufacturing and supply chain processes that don’t endanger workers? Vision-based robotics is a promising direction for this. Current industrial robots rely on solving differential equations to explicitly control desired movement. This works well when the input can be tightly controlled, but breaks down when the robot must generalize to new inputs. Vision-based robotics offers a more general control solution that can adapt to novel orientations of objects or weight distributions of boxes.

Medical Image Analysis

Assisting and automating Medical Image Analysis is one of the most commonly discussed applications of Deep Learning. These systems are improving rapidly and have moved into clinical trials. We refer readers to Topol’s guidelines for AI clinical research, exploring lessons from many clinical fields [84]. Our coverage of Medical Image Diagnosis for COVID-19 is mostly focused on classification of COVID-19 pneumonia, viral pneumonia, or healthy chest radiographs. There are many studies that focus on the Semantic Segmentation task, where every pixel in an image is classified. We discuss the Semantic Segmentation application in our section on “Interpretability” with respect to improving Human-AI Interaction. In our analysis, chest radiographs are sourced from either X-ray imaging or higher-resolution CT scans. Motivating the use of radiograph diagnosis, Fang et al. [1] find a 98% sensitivity with CT detection compared to 71% with RT-PCR. Ai et al. [85] look at the correlation between Chest CT and RT-PCR testing for 1014 patients concluding that “chest CT may be considered as a primary tool for the current COVID-19 detection in epidemic areas” [85]. Das et al. [86] highlight some reasons to prefer chest X-rays over CT-scans, namely that they are cheaper and more available, and they have lower ionizing radiation than CT scans do.

In our section on “Learning from Limited Labeled Datasets”, we will further discuss the challenge of fitting Deep Learning models with relatively small datasets. Medical image analysis may be the best example of this. Compared to the 1.4 million images in ImageNet, we rarely have more than 1000 COVID-19 positive chest radiographs. This is especially important in a pandemic outbreak situation, where we need to gather diagnostic information as fast as possible. Researchers have turned to variants of supervised learning as the solution to this problem. This includes transfer, self-supervised, weakly supervised, or multi-task learning.

Fortunately for this application, there are plenty of existing datasets that seek to classify pneumonia from chest radiographs and can be used to bootstrap representations learned for COVID-19 detection. Irvin et al. [7] constructed the CheXpert dataset of 224,316 chest radiographs of 65,240 patients prior to the COVID-19 outbreak. It is important to differentiate between COVID-19 and viral pneumonia, rather than thinking about this problem through the lens of COVID-19 vs. healthy. For an example of the dataset sizes available, Wenhui et al. [87] train their model on a dataset of 1341 normal, 1345 viral pneumonia, and 864 COVID-19 images. Wang et al. [42] published the COVIDx dataset with 13,975 CXR images across 13,870 unique patients. However, this dataset only contains 358 CXR images from 266 COVID-19 patient cases. We refer readers to [88,89,90] to review common approaches to Deep Learning with class-imbalanced datasets.
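One common way to handle class imbalance is to weight each class inversely to its frequency in the loss function. The sketch below applies this heuristic to the class counts quoted above from Wenhui et al. [87]. It is only an illustration of the general idea; we do not claim that any of the cited studies use this particular weighting, and the function name is hypothetical.

```python
def inverse_frequency_weights(class_counts):
    """Weight each class by N / (K * n_c).

    N is the total number of images, K the number of classes, and n_c the
    number of images in class c. Rare classes receive weights above 1.
    """
    total = sum(class_counts.values())
    num_classes = len(class_counts)
    return {
        label: total / (num_classes * count)
        for label, count in class_counts.items()
    }


if __name__ == "__main__":
    # Class counts quoted in the text for the dataset of Wenhui et al.
    counts = {"normal": 1341, "viral pneumonia": 1345, "COVID-19": 864}
    for label, weight in inverse_frequency_weights(counts).items():
        print(f"{label}: {weight:.3f}")
```

With these weights, each class contributes equally to the total weighted loss, so errors on the smaller COVID-19 class are not drowned out by the larger classes.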

Our literature review reveals that many of these studies pre-train models on the ImageNet dataset and then fine-tune them on the COVIDx dataset. This representation learning strategy is known as transfer learning. Farooq and Hafeez [91] transfer weights from a pre-trained ResNet50, Wang et al. [92] explore the Inception model, and Afshar et al. [93] deploy a Capsule Network. We do not report performance metrics such as accuracy, precision, or recall reported in these papers due to experimentation with extremely small datasets. All of these papers report gains with transfer learning compared to random weight initializations.

Raghu et al. [83] report a more sobering view of Transfer Learning for Medical Image Analysis. Most notably, they show that as medical imaging tasks collect more data, there is little to no benefit in ImageNet pre-training. On larger datasets such as Retina and CheXpert, there is no significant difference in performance when using Transfer Learning. However, Transfer Learning does improve performance in the small data regime. Small data is defined in this study as 5000 images. In the interest of COVID-19 applications, we note that this small data benefit is extremely important. However, if COVID-19 continues to spread and more positive cases are collected, we do not expect transfer learning from ImageNet to continue to be useful. We note this is heavily related to the domain mismatch between ImageNet and chest radiographs. We do expect transfer learning to continue to be effective from datasets such as CheXpert [7].

Transfer learning usually describes supervised learning on one dataset, and then using these weights to initialize supervised learning on another dataset. Transfer learning can also refer to using self-supervised learning on one dataset, or other learning variants we mentioned in our Introduction. There have been many promising advancements in self-supervised representation learning. For our COVID-19 applications, we will consider the use of contrastive self-supervised learning. This learning algorithm pushes representations of positive pairs to be close together and negative pairs far apart. The emerging practice in contrastive self-supervised learning [94, 95] is to form positive pairs from views of an image, where a view is a data augmentation transformation of the image [96]. MoCo [94] and SimCLR [95] are two of the most promising models for this. MoCo is generally preferred due to memory efficiency.
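The idea of pulling positive pairs together and pushing negative pairs apart can be sketched with an InfoNCE-style contrastive loss, written here in plain Python for a single anchor. This is a simplified illustration rather than the MoCo or SimCLR implementation: the embeddings are hand-written toy vectors instead of network outputs, the temperature value is an assumption, and all vectors are assumed to be non-zero.

```python
import math


def cosine_similarity(u, v):
    """Cosine similarity between two non-zero vectors of equal length."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def info_nce_loss(anchor, positive, negatives, temperature=0.1):
    """Cross-entropy of picking the positive among positive + negatives.

    The loss is low when the anchor is similar to its positive view and
    dissimilar to every negative example.
    """
    pos_logit = cosine_similarity(anchor, positive) / temperature
    logits = [pos_logit] + [
        cosine_similarity(anchor, n) / temperature for n in negatives
    ]
    # Numerically stable log-sum-exp over all logits.
    max_logit = max(logits)
    log_sum_exp = max_logit + math.log(
        sum(math.exp(x - max_logit) for x in logits)
    )
    return log_sum_exp - pos_logit


if __name__ == "__main__":
    anchor = [1.0, 0.0]
    aligned = info_nce_loss(anchor, [0.9, 0.1], [[-1.0, 0.0], [0.0, 1.0]])
    misaligned = info_nce_loss(anchor, [-1.0, 0.0], [[0.9, 0.1], [0.0, 1.0]])
    print(f"positive close to anchor:  {aligned:.4f}")
    print(f"positive far from anchor:  {misaligned:.4f}")
```

In practice the anchor and positive would be the encoder outputs for two augmented views of the same radiograph, and the negatives would come from other images in the batch or from a memory queue.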

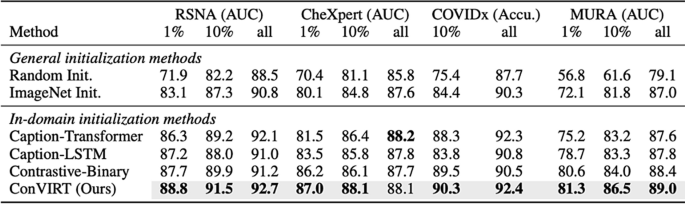

Zhang et al. [97] take a unique multi-modal approach to construct this learning task. They look to the short text descriptions that are paired with medical images. These annotations are much less detailed than the high-quality annotations that bottleneck the data collection process. Researchers have looked at how these text descriptions can facilitate visual representation learning, but rule-based label extraction is often inaccurate and limited to a few categories, and more generally these rules are domain-specific and sensitive to the style of text. Zhang et al. leverage this text to form image-text pairs with the medical images, naming their algorithm Contrastive Visual Representation Learning from Text (ConVIRT).

The improvements from fine-tuning ConVIRT on different medical image datasets, such as COVIDx, compared to ImageNet initialization and other techniques are shown in Fig. 11. In the setting with 1% of the CheXpert labels, transfer learning from ConVIRT achieves an AUC of 87.0 compared to 80.1 from ImageNet pre-training with supervised learning. With 10% of the COVIDx data, ConVIRT achieves 90.3% accuracy compared to 84.4%. Although more promising than ImageNet transfer learning with supervised learning, with more labeled data, even ConVIRT shows only modest gains over random initialization. Sowrirajan et al. [98] also present massive gains from the MoCo representation learning scheme. However, they only report results on the CheXpert dataset.

AUC and Accuracy performance gains from ConVIRT

Even in their takedown of ImageNet-based transfer learning for Medical Image Analysis, Raghu et al. [83] note transfer performance differences by redesigning computational blocks in the ResNet. As mentioned previously, architecture design is one of the most promising trends in Deep Computer Vision research. Subtle architectural changes such as the arrangements of Skip-connections in DenseNet [99] can yield a significant performance gain over a more standard ResNet [100] model. Researchers have turned to parameterizing a discrete search space of candidate architectures and searching through it for a suitable architecture. This search is usually done with evolutionary search [101] or reinforcement learning [102]. Wang et al. [42] turn to this research in the development of their COVID-Net for chest X-ray diagnosis. The authors design a macro, high-level architecture structure, and a micro set of operations such as 7 × 7 vs. 1 × 1 convolutions, and combine them using a generative synthesis search algorithm. This results in a 3% accuracy improvement over a more standard ResNet-50 architecture.

From our literature review, we believe that representation learning schemes and architecture design are the most salient areas for exploration. We additionally believe that multi-task learning with Semantic Segmentation could improve representation learning. However, multi-task learning can be challenging to implement due to conflicting gradient directions with updates. Collecting labeled data for training Semantic Segmentation models can be extremely tedious as well. Shan et al. [103] present an interesting human-in-the-loop strategy for labeling the pixels in COVID-19 CT scans. We also refer readers to an investigation from Gozes et al. [104] that uses Medical Image Analysis to explore COVID-19 disease progression over time. Finally, we think there may be value in exploring differential diagnosis with the supervised learning label space. This describes using tree-structured labels. These labels reward the model if it at least recognizes a parent node of the more specific, ground truth diagnosis. This strategy is generally known as Neural Structured Learning [105].
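The tree-structured label idea can be illustrated with a small scoring function that gives full credit for the exact diagnosis and partial credit for predicting one of its ancestors. The label hierarchy, the depth-ratio scoring rule, and the function names below are all hypothetical choices made for this sketch; it is not the Neural Structured Learning framework itself, and a training loss built on this idea would need a differentiable formulation.

```python
# Hypothetical diagnosis tree: each label maps to its parent ("root" is the top).
PARENT = {
    "healthy": "root",
    "pneumonia": "root",
    "bacterial pneumonia": "pneumonia",
    "viral pneumonia": "pneumonia",
    "COVID-19 pneumonia": "viral pneumonia",
}


def path_to_root(label):
    """Return the label followed by its ancestors, excluding the root."""
    if label not in PARENT:
        raise KeyError(f"unknown label: {label}")
    path = [label]
    while PARENT[path[-1]] != "root":
        path.append(PARENT[path[-1]])
    return path


def hierarchical_reward(predicted, truth):
    """Score a prediction against a ground-truth label in the tree.

    Returns 1.0 for an exact match, depth(predicted) / depth(truth) when the
    prediction is an ancestor of the truth, and 0.0 otherwise.
    """
    truth_path = path_to_root(truth)
    if predicted not in truth_path:
        return 0.0
    return len(path_to_root(predicted)) / len(truth_path)


if __name__ == "__main__":
    truth = "COVID-19 pneumonia"
    for guess in ("COVID-19 pneumonia", "viral pneumonia", "pneumonia", "healthy"):
        print(f"{guess}: {hierarchical_reward(guess, truth):.2f}")
```

Under this scoring, a model that predicts “viral pneumonia” for a COVID-19 case is penalized less than one that predicts “healthy”, which matches the intuition behind differential diagnosis.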

Ambient Intelligence